Facebook has developed a number of hypotheses about human behavior, using its popular news feed as a test bed and the underlying algorithm as an independent variable that changes multiple times per year.

In the latest experiment, the Menlo Park, Calif.-based technology company announced a shift toward prioritizing local news. Now the question is: How will the dependent variables being examined, all of us effectively serving as guinea pigs, react?

According to a Carnegie Mellon University professor who researches the spread of social information, Facebook doesn’t have a clue.

Not understanding how or why the news reaches us can be problematic, as the fake news epidemic illustrates. And the problem is vast: In 2016, the Pew Research Center found that 62 percent of U.S. adults got news from social media.

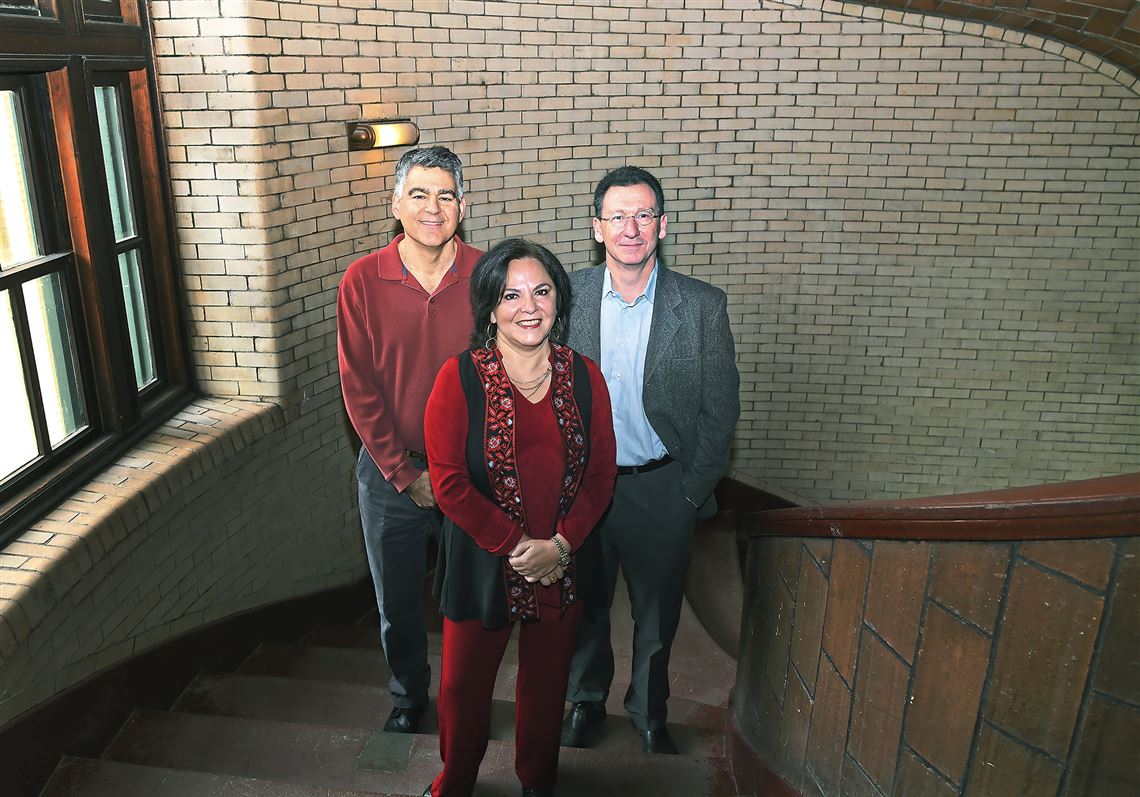

“Maybe they have better theories than I’ve heard, but [Facebook is] largely operating by trial and error,” said Christian Lebiere, a research psychologist in CMU’s Dietrich College of Humanities and Social Sciences and the principal investigator for CMU’s portion of a federally funded project called SocialSim.

Funded by a four-year, $6.7 million grant that the Defense Advanced Research Projects Agency (DARPA) awarded in November, the project will develop technologies to create an accurate, large-scale simulation of online social behavior.

The team is led by Virginia Tech researchers and includes three CMU experts as well as others at Stanford, Claremont, Duke, Wisconsin and the University of Southern California.

In the future, SocialSim could help explain why you’re seeing articles from the Pittsburgh Post-Gazette in your news feed, rather than from a website that you don’t recognize. Or, Mr. Lebiere pointed out, it could even help the U.S. government understand how foreign enemies use the internet to spread rumors.

Smart simulations

Mr. Lebiere’s team is developing the simulation for the SocialSim project. That is, they’re building a model that mimics human behavior; they can feed it a scenario and examine the results, no humans required.

Imagine a computer program that could take any situation, such as a change in the Facebook algorithm, and analyze how the general public would respond. That’s the model.

To create it, the team will build a “cognitive architecture,” a computational reproduction of how humans think.

“[Cognitive architectures] are meant to represent, at some level, the computational processes that go on as we perceive the world, as we act on the world, as we make decisions and as we plan to solve a problem,” Mr. Lebiere said.

One successful example is CMU’s “Act-R” cognitive architecture, which was completed for release in 2014. It essentially breaks down the human mind into a series of operations.

Act-R has been used to create models in hundreds of scientific publications, and it has been applied in cognitive tutors, which model a student’s behavior and personalize his or her curriculum and instruction based on any difficulties the student is experiencing.

The SocialSim simulation will need to capture decisions made by millions of people, all without modeling each individual.

The challenge of SocialSim, in Mr. Lebiere’s opinion, is to develop a good understanding of human thought processes and then scale it up to a societal level to see how large groups of individuals behave collectively. He offered an analogy to physics.

“You have a vat of gas, and you have molecules of gas,” he said. “The individual molecules all obey particular forces, but you can write an equation of the overall behavior of that mass of gas without referring to the individual molecules because that behavior will average in particular ways.”

Of course, people are a lot more complex than molecules, but it’s the same idea.

Stopping rumors, saving lives

The resulting model won’t be perfect, Mr. Lebiere said, but it will be useful. For one, SocialSim could help clarify how U.S. adversaries operate online.

In a report, DARPA noted that the U.S. government currently employs small teams of experts to speculate about how information might be spreading online, but those teams work slowly, their accuracy is unknown, and their analyses can be scaled to represent only a fraction of the real world.

Disaster relief campaigns could be more effective with a useful model of human behavior. Rumors about U.S. military efforts in foreign nations can undermine the very help those efforts are meant to provide, Mr. Lebiere pointed out.

For example, a rumor that the bottled water the U.S. military brought to Haiti was contaminated with “mind control” substances could keep survivors from drinking clean water. If researchers understood how this false information spread, it might be possible to prevent similar rumors in the future.

In another scenario, a simulation of human behavior could allow the U.S. to resolve conflicts without using violent force, he said.

And, of course, there’s always the possibility of understanding how fake news is spread — and stopping it.

In a commercial application, a company such as Facebook might use SocialSim to understand the real-world ramifications of a change in software.

“How is the algorithm change going to impact how the information spreads?” Mr. Lebiere asked. “The scale of human behavior is really playing out in this program.”

Courtney Linder: clinder@post-gazette.com or 412-263-1707. Twitter: @LinderPG.


First Published: February 6, 2018, 5:15 p.m.