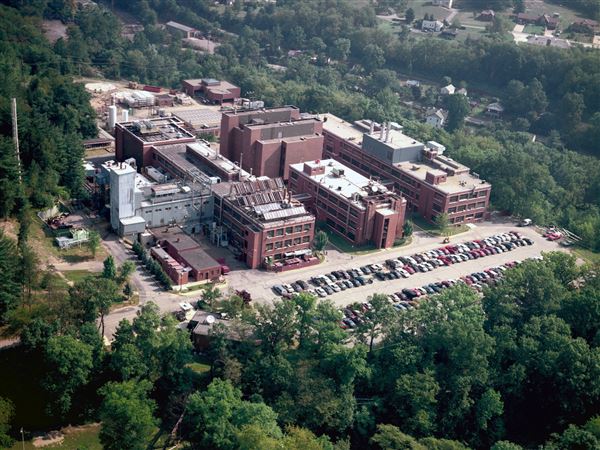

At Carnegie Mellon University, professors and students intent on toning down the online vitriol gained new motivation from the massacre a year ago and a mile from campus.

“I was actually driving through Squirrel Hill after having breakfast with friends” on the morning of Oct. 27, 2018, said Geoff Kaufman, an assistant professor at CMU’s Human-Computer Interaction Institute. “A friend of mine lived just a block away from Tree of Life. So it was a pretty poignant day.”

He’s one of a cluster of researchers, each backed by talented students, dedicated to finding the right way to improve the tone on social media, which has increasingly been identified as a breeding ground for extremism and violence.

“If anything [Tree of Life] gave me more driving motivation to study issues of bias and inter-group violence,” he said.

At the time of the Tree of Life massacre, Kathleen M. Carley was already deep into research on the methods by which foreign powers and extremist groups exploit social media to influence elections and destabilize populations. Such actors worm their way into existing online communities, then share disinformation and propaganda with others in the group, taking advantage of the credibility afforded them as members, said Ms. Carley, the director of CMU’s Center for Computational Analysis of Social and Organizational Systems.

“If you think it’s coming from your group, you start believing it,” she said.

People who have come to believe one conspiracy theory often can be convinced of others, she said. Once they’re in, it’s hard to pull them out of conspiracy land.

“Facts rarely work” as a counter to conspiracy theories, she said, because believers “are operating emotionally” and are predisposed to discount anything that runs counter to those feelings. Plus, she said, for some people disinformation “is just plain fun” compared to cold reality.

Sometimes mocking a piece of disinformation is more effective than countering it with facts, she added.

She and other technologists have developed “bot hunters, troll hunters” and “ways of identifying memes,” she said. What they don’t have is a foolproof way to “identify who the bad guys are. … What are they trying to do with their messaging? Who is vulnerable, and how can we help those who are vulnerable?”

With a Knight Foundation grant, she recently launched the Center for Informed Democracy and Social Cyber Security — or IDeaS — which will try to address some of those questions.

In May, a CMU team including Mr. Kaufman published a paper showing that cleverly designed “CAPTCHA” systems — tasks you do to prove you’re not a robot — can subtly alter the mood of online commenters. Ask the would-be commenter to use the mouse to draw a smiley face before weighing in on a politically charged blog post, and you tend to get users “even more engaged with the topic, but expressing their opinions in a more positive way,” he said.

His team is experimenting with other tools that might change the tone on social media and in multi-player computer games, where bias against people who aren’t straight white males often “runs rampant,” he said.

He is not confident, though, that this approach would work on Gab.com — the accused Tree of Life shooter’s favorite social media site — or on 8chan, where shooters reportedly posted prior to racially driven attacks in Poway, Calif., El Paso, Texas, and Christchurch, New Zealand.

“I’m not sure the approaches that we’ve used would be that effective in those spaces,” he said.

Some of Big Tech’s early attempts to curb violent and hateful speech may have drawbacks.

Since mid-2018, some social media platforms have set standards for speech and tried a variety of methods — from banning violators to quarantining them in hard-to-find corners — to discourage violent and hateful speech.

“If you crack down, you may drive people away,” said Carolyn P. Rose, a professor at CMU’s Human-Computer Interaction Institute and its Language Technologies Institute. “It may look like you contained the problem. But you might actually cause a breakdown in communication between the two sides” of the ideological spectrum.

Extremists driven from Twitter to Gab or 8chan might have dramatically less exposure to other viewpoints, and might be immersed in more radical ideas, she said.

She’s using a database of online interactions to study the characteristics of civil conversations between people of different ideological viewpoints. If researchers can figure out what makes people from different parts of the political spectrum click with each other, then the algorithms that recommend new followers could be tweaked in ways that would connect people across ideological divides, rather than strengthening the walls of our echo chambers.

“If we foster exchange between people who align differently in their political views,” she said, “then it at least keeps the communication lines open.

“Companies like Google have this very much on their radar. They realize that there’s actually something to be gained by not just trying to eradicate the unsavory behaviors, but to actually foster positive behavior.”

Rich Lord: rlord@post-gazette.com or 412-263-1542

First Published: October 21, 2019, 11:00 a.m.