It’s happened to the best of us: You’re dribbling the basketball down the court when all of a sudden you realize it appears to be stuck to your fingers.

Or, you release the ball and watch it move in a pretty impossible trajectory from your hand to the surface of the court.

Well, that mostly only happens in the virtual world of “NBA 2K” video games.

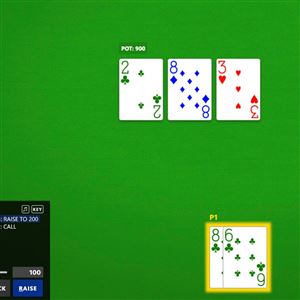

Those games are state-of-the-art. The avatars have pretty natural motion, sure, but they still contain some artifacts — essentially computer science lingo for glitches.

New Carnegie Mellon University research uses a form of deep learning to create physics-based character animations for video games. The aim is to create the most realistic movements possible.

“If we understand how people move, we can see if they’re moving in expected or unexpected ways,” said Jessica Hodgins, a co-author of the new study who also serves as a professor at CMU’s Robotics Institute and Computer Science Department.

That understanding is useful in medicine and biomechanics, she said, because it helps with rehabilitation exercises. In the short term, though, Ms. Hodgins expects the research to be used commercially for video games.

The authors used deep reinforcement learning, a type of goal-oriented algorithm, to teach computers to dribble. Not unlike the 10,000-hour rule set forth by Malcolm Gladwell in his book “Outliers: The Story of Success,” it takes serious repetition to teach computers these moves. Mr. Gladwell’s rule states that it takes 10,000 hours of intentional practice to master a skill.

Outside motion capture footage of real people dribbling basketballs was used to teach the system. Over millions of trials, animated players learned to dribble between their legs, dribble behind their backs and do crossover moves. They also discovered how to transition from one skill to another.

All in all, the research took about two years to complete, but it took less than 24 hours to teach the computer each of these skills, despite the repetition.
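To give a feel for what learning by repetition means, here is a minimal sketch in Python. It is not the researchers' method: it swaps deep reinforcement learning for a far simpler trial-and-error loop, and the physics constants, the candidate push speeds and every function name are assumptions made up for illustration. The program tries different downward pushes on a ball and gradually settles on the one that brings the ball back to the hand.

```python
import random

GRAVITY = 9.81       # m/s^2
RESTITUTION = 0.8    # assumed fraction of speed the ball keeps after a bounce
HAND_HEIGHT = 1.0    # assumed hand height in metres


def rebound_height(push_speed):
    """Height the ball returns to after being pushed down from hand height."""
    impact_speed_sq = push_speed ** 2 + 2 * GRAVITY * HAND_HEIGHT
    return RESTITUTION ** 2 * impact_speed_sq / (2 * GRAVITY)


def reward(push_speed):
    """Closer to zero (better) when the ball comes back to the hand."""
    return -abs(rebound_height(push_speed) - HAND_HEIGHT)


def learn_push_speed(candidates, trials=1000, explore=0.1, seed=0):
    """Pick the best push speed by repeated trial and error."""
    rng = random.Random(seed)
    value = [0.0] * len(candidates)   # running average reward per option
    count = [0] * len(candidates)     # how often each option was tried
    for trial in range(trials):
        if trial < len(candidates):
            choice = trial                            # try every option once
        elif rng.random() < explore:
            choice = rng.randrange(len(candidates))   # occasional exploration
        else:
            choice = max(range(len(candidates)), key=lambda i: value[i])
        count[choice] += 1
        value[choice] += (reward(candidates[choice]) - value[choice]) / count[choice]
    best = max(range(len(candidates)), key=lambda i: value[i])
    return candidates[best]


best_push = learn_push_speed([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
```

With these made-up numbers, a push of 3 meters per second returns the ball closest to the hand. The real system faces a vastly harder version of the same idea: a full simulated body, many joints and millions of trials.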

It’s all about building “physics-based controllers” from motion capture data, said Ms. Hodgins.

A controller, in this case, is essentially a software program that directs the flow of data from one system to another (consider a video game controller that allows you to make decisions and share them with the game through a hand-held device).

So when you move your avatar dribbling down the court to the basket, the controller considers the current state of your character — such as the pose and the velocity at which you’re traveling — as well as the current state of the rest of the court before computing the way the basketball will roll from your character’s fingertips.

That ensures that, given the avatar’s motion, the ball moves in a physically plausible way. Rather than the ball appearing to stick to the player’s fingers, it might spin on the avatar’s fingertips. Those minute details are usually very difficult to capture.
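In code, a controller of this kind boils down to a function that reads the character's current state and returns the forces to apply next. The sketch below is only an illustration of that shape, not the study's controller, which is learned from motion capture data. It assumes a simple proportional-derivative rule, a common textbook choice in physics-based animation, and the joint names, stiffness and damping values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JointState:
    angle: float     # current joint angle in radians
    velocity: float  # current angular velocity in radians per second


def pd_torque(state, target_angle, stiffness=50.0, damping=5.0):
    """Torque that pulls the joint toward target_angle and damps its motion."""
    return stiffness * (target_angle - state.angle) - damping * state.velocity


def controller(joints, targets):
    """Map the character's current state to one torque per joint."""
    return {name: pd_torque(joints[name], targets[name]) for name in joints}


# Hypothetical two-joint arm: the wrist is off target, the elbow is on
# target but still moving.
joints = {"wrist": JointState(angle=0.0, velocity=0.0),
          "elbow": JointState(angle=1.0, velocity=2.0)}
targets = {"wrist": 0.5, "elbow": 1.0}
torques = controller(joints, targets)
```

Here the wrist receives a positive torque that moves it toward its target, while the elbow receives a negative torque that slows its motion. The physics simulation, not the controller, then decides where the arm and the ball actually end up.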

Ms. Hodgins said she and co-author Libin Liu, chief scientist for DeepMotion, a Redwood City, Calif.-based animation company, focused on basketball because the speed and agility required to play the game are significant.

“It’s challenging ... the character is able to do some pretty complicated manipulations as far as the tricks and dribbling,” she said. “And you have to remain balanced while doing these tricks with the ball.”

In the future, Ms. Hodgins said, a similar method may be used to train robots.

While there’s an added layer of complexity in robots — you must actually build the mechanism — they’re still subject to the real-world rules of physics that a video game avatar may override.

“Because we’re doing simulations, we don’t have torque limits that humans have that limit us,” Ms. Hodgins explained. “Even [with] an athlete, there’s a limit to the power source and how strong they may be.”

As for the next challenge? Ms. Hodgins says it’ll likely be soccer.

Courtney Linder: clinder@post-gazette.com or 412-263-1707. Twitter: @LinderPG.

First Published: August 7, 2018, 12:45 p.m.